AI to Optimize Environmental Perception of Underwater Robots

Poor visibility severely limits how well autonomous robots can perceive their environment. How can they still operate safely and reliably? In the new DeeperSense project, an international consortium led by the German Research Center for Artificial Intelligence (DFKI) is developing technologies that use artificial intelligence (AI) to combine the strengths of visual and acoustic sensors. The aim is to significantly improve the perception of robotic underwater vehicles in three use cases from the maritime sector. The European Union (EU) is funding the project with around three million euros.

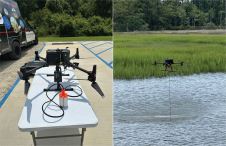

Robotic systems have numerous potential applications. In practice, however, autonomous robots often lack the technologies they need to perceive complex environments comprehensively. Visual sensors such as cameras, which robots use for autonomous navigation, manipulation, mapping and object recognition, provide detailed information about their surroundings, but their performance depends heavily on light and visibility conditions. Acoustic sensors for distance measurement, by contrast, work regardless of visibility. They also generate image-like data, but at a much lower resolution than cameras, and they perform poorly at close range.

Intelligent Technologies for Practicable Robot Systems in Complex Environments

This is where the DeeperSense (Deep-Learning for Multimodal Sensor Fusion) project comes in. Launched on 1 January 2021 and funded under the EU’s Horizon 2020 research framework programme, the project aims to significantly improve the environmental perception of service robots, especially in complex and unstructured environments such as underwater. To achieve this, it uses AI, in particular deep learning as a data-driven machine learning method, to combine the strengths of visual and non-visual sensors so that their joint perception exceeds what either sensor can achieve alone. This should not only make autonomous systems considerably more capable and reliable, but also open up new functions and applications for robotics.

The DeeperSense concept will be demonstrated in three use cases from the particularly demanding underwater sector: diver monitoring in turbid waters, exploration of coral reefs, and seabed mapping. For this purpose, the project consortium brings together leading experts in the fields of maritime robotics, AI and underwater sensor technology – the DFKI Robotics Innovation Center, the University of Girona, the University of Haifa and Kraken Robotik GmbH – with end users from the three different application areas – the German Federal Agency for Technical Relief (THW), the Israel Nature and Parks Authority and Tecnoambiente SL.

Improved Environmental Perception in Underwater Applications

DeeperSense is based on the overarching concept of intersensory learning, in which one sensor modality (B) learns from another (A). Sensor B can then provide output similar to that of sensor A, in terms of both accuracy and the type and interpretation of the data. To train it, sensor A, for example a camera, and sensor B, for example a sonar, observe the same scene simultaneously. The low-resolution sonar data serve as input to an artificial neural network, and the high-resolution camera data serve as the target output. During training, the network is adjusted step by step until its output approximates the camera data, and in this way it learns the relationships between input and output. Once sufficiently trained, the resulting algorithm can generate a camera-like image from the low-resolution sonar data alone. In the project, this concept will be implemented in three core algorithms tailored to the specific requirements of the use cases.
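
The training scheme described above can be illustrated with a toy example. The following Python/NumPy sketch is a minimal stand-in under stated assumptions, not the project's method: the "scenes" are synthetic one-dimensional scanlines, the "camera" (sensor A) sees them at 16 pixels, and the "sonar" (sensor B) sees noisy block averages at 4 cells. A small network with one hidden layer, trained by plain gradient descent on the mean squared error, learns to map sonar readings to camera-like scanlines. All names and sizes (`HI_RES`, `LO_RES`, `make_scenes`, `camera`, `sonar`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

HI_RES = 16  # pixels per "camera" scanline (hypothetical)
LO_RES = 4   # cells per "sonar" scanline (hypothetical)


def make_scenes(n):
    """Synthetic 1-D scenes: one bright object with random position and width."""
    x = np.arange(HI_RES)
    centers = rng.uniform(2, HI_RES - 2, size=(n, 1))
    widths = rng.uniform(1.0, 3.0, size=(n, 1))
    return np.exp(-((x - centers) / widths) ** 2)  # shape (n, HI_RES)


def camera(scenes):
    """Sensor A: observes the scene at full resolution."""
    return scenes


def sonar(scenes, noise=0.05):
    """Sensor B: averages blocks of pixels (low resolution) and adds noise."""
    blocks = scenes.reshape(len(scenes), LO_RES, HI_RES // LO_RES).mean(axis=2)
    return blocks + rng.normal(0.0, noise, size=blocks.shape)


def init(hidden=32):
    """Random weights for a network with one tanh hidden layer."""
    return {
        "W1": rng.normal(0.0, 0.5, (LO_RES, hidden)), "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.5, (hidden, HI_RES)), "b2": np.zeros(HI_RES),
    }


def forward(p, x):
    """Map sonar readings (n, LO_RES) to predicted camera scanlines (n, HI_RES)."""
    h = np.tanh(x @ p["W1"] + p["b1"])
    return h, h @ p["W2"] + p["b2"]


def train(p, x, y, lr=0.3, epochs=3000):
    """Full-batch gradient descent on the mean squared error; returns the loss history."""
    losses = []
    for _ in range(epochs):
        h, y_hat = forward(p, x)
        err = y_hat - y
        losses.append(float(np.mean(err ** 2)))
        g_out = 2.0 * err / err.size                # d(loss)/d(y_hat)
        g_h = (g_out @ p["W2"].T) * (1.0 - h ** 2)  # back-propagate through tanh
        grads = {
            "W2": h.T @ g_out, "b2": g_out.sum(axis=0),
            "W1": x.T @ g_h, "b1": g_h.sum(axis=0),
        }
        for k in p:
            p[k] -= lr * grads[k]
    return losses


# Sensors A and B observe the same scenes simultaneously.
scenes = make_scenes(500)
params = init()
losses = train(params, sonar(scenes), camera(scenes))

# After training, only sonar data are needed to produce camera-like output.
test_scenes = make_scenes(100)
_, predicted = forward(params, sonar(test_scenes))
```

The camera is needed only while collecting training pairs; at inference time, `forward` receives sonar readings alone, which mirrors the idea of generating a camera-like image from sonar data.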

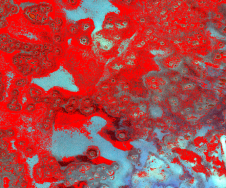

In the first use case, an autonomous underwater vehicle (AUV) monitors divers working in turbid waters. This task is usually performed by a remotely operated robot whose camera transmits images to a control station. Because optical sensors are of limited use underwater, the project partners are also equipping the AUV with a sonar sensor. In DeeperSense, they aim to teach this sensor to deliver camera-like images that personnel at the control station can easily interpret.

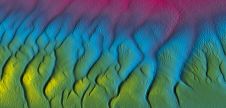

The second use case deals with the robotic exploration of complex and sensitive underwater structures such as coral reefs. To create detailed environmental maps for reliable obstacle detection, the project combines visual and acoustic sensors. The challenge here is to reconcile the sensors' differing perspectives: the planned algorithm must be able to recognize objects identified in one sensor's data in the other sensor's data. This will allow autonomous robots to navigate through a coral reef instead of just passing over it.
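
One simple way to picture this correspondence problem is a geometric baseline, not the learned algorithm the project plans: if the camera's intrinsics and the relative pose of the two sensors are known, 3-D sonar points can be projected into the image and matched to the nearest camera detection. The sketch below assumes a pinhole camera model, a sonar that returns 3-D points, and greedy nearest-neighbour matching; the matrices `K`, `R_CAM_SONAR` and `T_CAM_SONAR`, the threshold `max_dist` and the example detections are all hypothetical.

```python
import numpy as np

# Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])

# Hypothetical extrinsics (sonar frame -> camera frame). Both frames use x right,
# y down, z forward; the axes are aligned and the sonar sits 0.2 m below the camera.
R_CAM_SONAR = np.eye(3)
T_CAM_SONAR = np.array([0.0, 0.2, 0.0])


def sonar_to_pixels(points_sonar):
    """Project 3-D sonar points (N, 3), in metres, to camera pixel coordinates (N, 2)."""
    p_cam = points_sonar @ R_CAM_SONAR.T + T_CAM_SONAR
    uvw = p_cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def associate(sonar_px, camera_px, max_dist=25.0):
    """Greedily match each projected sonar detection to the nearest unused camera
    detection within max_dist pixels; returns (sonar_index, camera_index) pairs."""
    pairs, used = [], set()
    for i, s in enumerate(sonar_px):
        d = np.linalg.norm(camera_px - s, axis=1)
        for j in map(int, np.argsort(d)):
            if d[j] > max_dist:
                break  # all remaining candidates are even farther away
            if j not in used:
                pairs.append((i, j))
                used.add(j)
                break
    return pairs


# Two objects seen by the sonar (sonar frame, metres) ...
sonar_points = np.array([[0.5, -0.2, 4.0],
                         [-1.0, 0.0, 5.0]])
# ... and two detections in the camera image (pixel centres, listed in a different order).
camera_detections = np.array([[160.0, 272.0],
                              [420.0, 240.0]])
pairs = associate(sonar_to_pixels(sonar_points), camera_detections)
# pairs == [(0, 1), (1, 0)]
```

This is a simplification: many imaging sonars do not resolve elevation, so a single return corresponds to an arc of possible positions rather than one point.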

Finally, in the third use case, the partners want to enable an AUV to generate precise maps of the seafloor. Such surveys are typically carried out by ships, which is very expensive. Cameras usually provide supplementary information to verify the acoustic data, but this is impractical in the darkness of deep water. In the project, the AUV will use various acoustic sensors to classify seafloor sediments. With the DeeperSense concept, the acoustic sensors learn to produce annotated maps of different seafloor types that are as detailed as those cameras could provide in good visibility.
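
The same intersensory idea can be sketched for seafloor classification: where cameras can see, their annotations serve as labels for acoustic measurements, and a classifier trained on those pairs can then annotate areas where only acoustic data exist. The sketch below swaps in a simple nearest-centroid classifier for the deep networks the project intends to use, and it simulates all data; the sediment classes, the two per-cell features (backscatter strength and a roughness index) and their values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

CLASSES = ["sand", "gravel", "rock"]  # hypothetical sediment classes
# Hypothetical mean acoustic features per class: (backscatter strength in dB, roughness index).
CLASS_MEANS = np.array([[-30.0, 0.2],
                        [-22.0, 0.5],
                        [-15.0, 0.9]])
FEATURE_STD = np.array([2.0, 0.1])  # hypothetical within-class spread of each feature


def acoustic_features(labels):
    """Simulate noisy per-cell acoustic features for the given class labels."""
    noise = rng.normal(0.0, 1.0, size=(len(labels), 2)) * FEATURE_STD
    return CLASS_MEANS[labels] + noise


def fit_centroids(features, labels, n_classes):
    """Nearest-centroid training: one mean feature vector per class."""
    return np.stack([features[labels == c].mean(axis=0) for c in range(n_classes)])


def predict(centroids, features, scale):
    """Assign each cell to the class whose centroid is nearest after per-feature scaling."""
    diff = (features[:, None, :] - centroids[None, :, :]) / scale
    return np.linalg.norm(diff, axis=2).argmin(axis=1)


# Training area in clear, shallow water: camera imagery provides the labels.
# Here the camera annotation is simulated as the true class of each cell.
camera_labels = rng.integers(0, len(CLASSES), size=600)
train_features = acoustic_features(camera_labels)
scale = train_features.std(axis=0)  # puts both features on a comparable scale
centroids = fit_centroids(train_features, camera_labels, len(CLASSES))

# Survey area in dark, deep water: only acoustic data are available.
true_map = rng.integers(0, len(CLASSES), size=(20, 30))
survey_features = acoustic_features(true_map.ravel())
predicted_map = predict(centroids, survey_features, scale).reshape(true_map.shape)
accuracy = float((predicted_map == true_map).mean())
```

`predicted_map` holds one class index per grid cell, that is, an annotated sediment map produced from acoustic data alone; `accuracy` can be computed here only because the simulation knows the true map.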

Algorithm Development and Optimization

During the project, the partners will generate comprehensive training data for the three use cases. The algorithms will be trained on these data and tested under real conditions, both in the laboratory and in extensive field tests in inland waters, the Mediterranean and the Red Sea. Finally, the trained algorithms will be optimized to run on board the underwater vehicles in real time, so that they can directly support the robots’ autonomous behaviour. The consortium intends to make the results available to the robotics community in online repositories embedded in European research infrastructures.

Source: German Research Center for Artificial Intelligence.