Technology in Focus: Hydrographic Processing Software

In What Direction is Progress Headed?

Discussing and comparing software has often been tricky in Hydro International, as it is difficult to put the packages side by side and see clear differences. In this overview of hydrographic processing software, we highlight trends and movements as indicated by the responses from software suppliers to our questions, and we add our own observations. All in all, this makes for interesting reading, as the movements in the industry also tell us where the profession is headed.

By Joost Boers, Hydro International, The Netherlands and Lars Kårlin, Data Processing manager, MMT, Sweden.

Firstly, we would like to establish what ‘hydrographic processing software’ is. Where does ‘processing’ start and end? Most suppliers start with the acquired data; in most cases the raw data is the starting point for the surveyors working on the set. This may even be live data. Beamworx defines the moment that the data is released by the acquisition software as the starting point for its specific data patch and data cleaning software.

The ‘processing’ ends when the georeferenced data is ready to be turned into deliverables such as 2D/3D models, volume calculations for dredging, contour maps, habitat maps, etc. Most packages can be tailored to fit the workflow of the end-user.

Typical Users

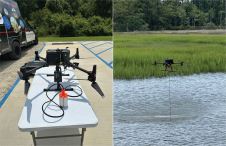

Who uses the packages? According to the suppliers, the packages are extensively used in nautical charting and Oil & Gas. Dredging is also an application that was mentioned often. QPS mentioned an extension of the application towards terrestrial data: highway profiling surveys and terrestrial laser scanning surveys. This may sound new and surprising, but a multibeam echo sounder also creates a point cloud. HYPACK has also taken a step towards the ‘terrestrial’, having launched a version for processing unmanned aerial vehicle (UAV) data. Eiva allows the user to add any type of point cloud, such as those derived from terrestrial laser scanning or photogrammetric surveys, and display those together with the typical multibeam survey data.

More Data Sources

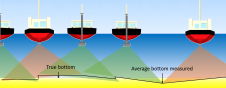

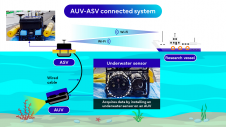

Most packages facilitate live data monitoring and processing and strive to minimise the lag between the logging of a survey line and the start of its post-processing. This is becoming more important as additional data is collected, either by the same sensor or by additional sensors that often work simultaneously. While more data is logged per unit of time, the turnaround time from acquisition to a preliminary dataset at the client's disposal is often only around 24 to 48 hours. This puts a significant strain on the operator of the processing software. Sensors are no longer placed only on a vessel: the number of possible platforms has increased over time. Think of AUVs, USVs or ROVs.

One of the visible trends is a greater number of data sources that need to be ‘handled’. More data is also available from a single source. Think of water column data and backscatter, which can be analysed for further information. On this point, most packages are suited to processing the data from different sensors. Only Kongsberg SIS QA and, at the moment, Ocean Contour from Ocean Illumination are dedicated to specific instruments: Kongsberg sensors and Nortek Signature series acoustic Doppler profilers respectively. The latter is designed to be opened up to more instruments.

Being able to process the data from more than one kind of sensor, or various types of data from the same sensor (such as backscatter and water column data), in one software suite is judged to be an advantage on most occasions. Operators share this preference: they need to be familiar with only one package to work with all incoming data. Keeping the software generic towards sensors allows it to adapt both to applications and requirements from business or science and to technical developments such as the introduction of new sensors. Organisations that work on a project basis can keep the same software and use the sensors that are best for each job.

Operational Aspects

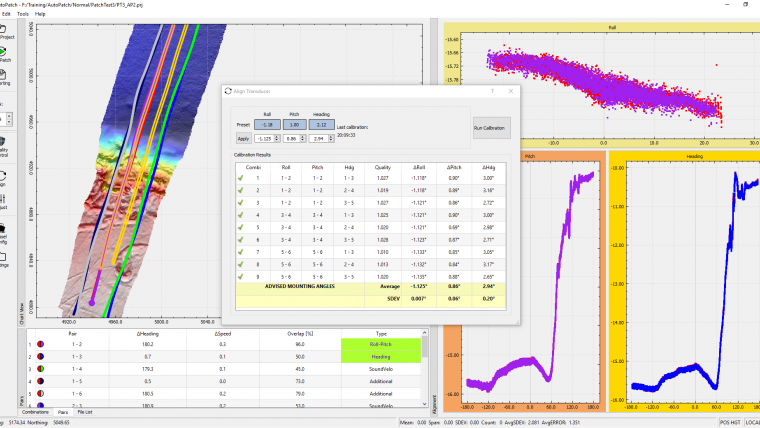

From an operator's perspective, there are significant differences between the packages, the most prominent being that some packages come bundled with an acquisition part and others do not. One advantage of a bundled solution is that online settings such as lever arm and angular offsets are transferred without requiring additional user input, thereby removing one plausible source of error. It also allows the user to move back and forth in the workflow with less delay, which helps to increase the efficiency of the processing.
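To illustrate why lever arm and angular offsets matter, the following is a minimal sketch of how a lever arm might be applied to a reference position. It is not taken from any of the packages discussed. The function names, the body frame (forward, starboard, down), the north-east-down output frame and the rotation order (roll, then pitch, then heading) are all assumptions made for illustration; real packages each define their own sign and axis conventions, which is exactly why re-entering these values by hand is error-prone.

```python
import math


def lever_arm_to_ned(lever_arm, roll_deg, pitch_deg, heading_deg):
    """Rotate a body-frame lever arm into north, east, down components.

    Assumed conventions (hypothetical, for illustration only):
    lever_arm is (forward, starboard, down) in metres, and the rotation
    is applied as roll, then pitch, then heading.
    """
    x, y, z = lever_arm
    r, p, h = (math.radians(a) for a in (roll_deg, pitch_deg, heading_deg))
    cr, sr = math.cos(r), math.sin(r)
    cp, sp = math.cos(p), math.sin(p)
    ch, sh = math.cos(h), math.sin(h)

    north = ch * cp * x + (ch * sp * sr - sh * cr) * y + (ch * sp * cr + sh * sr) * z
    east = sh * cp * x + (sh * sp * sr + ch * cr) * y + (sh * sp * cr - ch * sr) * z
    down = -sp * x + cp * sr * y + cp * cr * z
    return north, east, down


def sensor_position(ref_ned, lever_arm, roll_deg, pitch_deg, heading_deg):
    """Add the rotated lever arm to a reference position given in NED metres."""
    dn, de, dd = lever_arm_to_ned(lever_arm, roll_deg, pitch_deg, heading_deg)
    return ref_ned[0] + dn, ref_ned[1] + de, ref_ned[2] + dd


# A transducer mounted 2 m forward of the reference point, vessel heading due east:
# the offset ends up (almost exactly) 2 m to the east of the reference position.
offset = lever_arm_to_ned((2.0, 0.0, 0.0), roll_deg=0.0, pitch_deg=0.0, heading_deg=90.0)
```

A single wrong sign or swapped axis in these offsets shifts every sounding, so carrying them over automatically from acquisition avoids a whole class of mistakes.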

This brings us to the workflow. Most packages allow the user to define the workflow, and the solution wraps around this. Most of them can work with the raw data and allow live (real-time) monitoring and near real-time processing, and suppliers are working to optimise this even further. Teledyne Caris Onboard has been developed with real-time processing in mind, especially when using autonomous survey platforms.

Looking to the Future

As we are talking trends, it is interesting to see where everything is heading. Some aspects have already been touched on. The main trends we see in the development of hydrographic processing software are mentioned below.

- More data. The data comes from more than the traditional sources (single beam and multibeam echo sounders, SVPs, ADCPs…) and its volume is growing. Multi-head echo sounders and the integration of (underwater) laser scanning, photo and video are multiplying the amounts of data. Increased survey speed as a result of improved sensor performance further increases the amount of data being acquired per unit of time. Processing power of the hardware will increase as well.

- Big data. With an increasing demand for cost efficiency, there is an obvious market for automating tasks on a larger scale than is seen today. If methods that allow machine learning to take place are made available to service providers, processing time can be trimmed and repeatability may also improve, reducing costly rework.

- Storage and accessibility of the data. The amount of data is increasing, while the cost of data storage (in the cloud) is decreasing. More data will be created and stored in the ‘cloud’: online data storage that is available from various parts of the world. As (satellite) communication costs are also decreasing, real-time access to data from around the world is facilitated. This means that an operator can work with data collected somewhere completely different shortly after acquisition. And after processing, the data is made available to the end-user much more quickly.

- One or more AUVs or USVs can be operated from a single vessel, all collecting data that needs monitoring and quality checking and then needs to be put together and processed to the same standard. Human intervention will be reduced, and routine jobs in data processing in particular should be automated, maybe even on board the vehicle (in the case of an AUV or USV).

- Visualisation is already important for judging data quality. This aspect is set to gain additional interest among hydrographic (and oceanographic) professionals. In some of the packages we notice a distinct focus on improving the visualisation experience for the end-user. The 3D (and sometimes 4D) environment that is the workplace of a hydrographic processor constitutes a large part of that person's working environment.

- The software, especially the user interface, will be simplified and will even take an app-like approach, making it user-friendly even for people who are not familiar with a particular package.
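As an illustration of the kind of routine job that could be automated, the sketch below flags suspicious soundings along a single profile by comparing each depth with the median of its neighbours. It is a deliberately simple stand-in and not how any of the packages mentioned here clean data. The function name `flag_spikes`, the window size, the threshold and the 0.05 m minimum scale are all hypothetical values chosen for the example.

```python
from statistics import median


def flag_spikes(depths, window=5, threshold=3.0, min_scale=0.05):
    """Return one boolean per depth, True where the value looks like a spike.

    Each depth (in metres) is compared with the median of its neighbours
    inside a sliding window. The median absolute deviation (MAD) of those
    neighbours sets the scale; min_scale avoids a zero scale on flat seabed.
    All parameter defaults are illustrative assumptions.
    """
    half = window // 2
    flags = []
    for i, depth in enumerate(depths):
        lo = max(0, i - half)
        hi = min(len(depths), i + half + 1)
        # Neighbours in the window, excluding the sounding under test.
        neighbours = list(depths[lo:i]) + list(depths[i + 1:hi])
        if not neighbours:
            flags.append(False)
            continue
        med = median(neighbours)
        mad = median([abs(n - med) for n in neighbours])
        scale = max(mad, min_scale)
        flags.append(abs(depth - med) > threshold * scale)
    return flags


# A short profile with one obvious outlier at 15.0 m.
profile = [10.0, 10.1, 10.0, 15.0, 10.1, 10.0, 10.1]
suspect = flag_spikes(profile)  # only the 15.0 m sounding is flagged
```

A filter like this only flags soundings for review; deciding whether a flagged point is noise or a real feature, such as a wreck or a boulder, remains a judgement that automation has to earn trust for.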

Another development that will have an impact on the processing of hydrographic (and oceanographic) survey data is crowdsourcing. This will enable more of the seafloor to be mapped, but it results in varying data quality, and other issues may need to be overcome before crowdsourced data can be matched with existing or adjacent areas surveyed by other organisations.

More Information

Find specifications and suppliers of hydrographic processing software solutions on Geo-Matching.com.

Joost Boers has held various positions at Geomares Publishing since 2006. In October 2013, he became editorial manager of Hydro International. He was a council member of the Hydrographic Society Benelux from 2008 to 2014.

Lars Kårlin is Data Processing manager at MMT AB and is based in Göteborg, Sweden. With a background as an offshore data processor, he now leads a team of data processing specialists based all over Europe who crew the vessels in the MMT fleet.

Geo-Matching.com

For an overview of characteristics of hydrographic processing software see Geo-Matching.com: https://geo-matching.com/hydrographic-processing-software
